Up until about a year before the start of this project, I had an idea about “not becoming a laptop musician”. This idea came partly from having experienced several concerts where the musicians were looking at their laptops and displaying few signs of interacting with the musicians around them, and sometimes not even with the music being played. Furthermore, I had some prejudices about the musical opportunities available from something that I regarded as being “computer music”.

Working with sensors and a granular synthesis patch made in the MaxMSP program[1] in a BOL project made me change my mind about this. I discovered that the software-based processing in this particular patch could create musical components that were very different from, and also in a sense more organic than, the sounds my outboard machines could produce. For me, much of the organic character derived from the possibilities of randomness that could be implemented during processing. It was also important that the sound that was processed came from my voice.

Working with sensors as controllers was exciting, but I also experienced limitations with respect to what I could actually control and play with. I realised that MIDI controllers with knobs and faders could give me more detailed and varied control than the sensors, and in a more physical and intuitive way than I felt the laptop keyboard could offer. I therefore added the Novation Remote Zero MIDI controller to the computer, the audio interface and MaxMSP in my setup. I also realised that I wanted the option to include several MaxMSP patches. To do this, I needed a new setup on my computer, and this led me to Ableton Live when Max for Live was introduced.

Ableton Live with Max for Live

Ableton Live, scene view

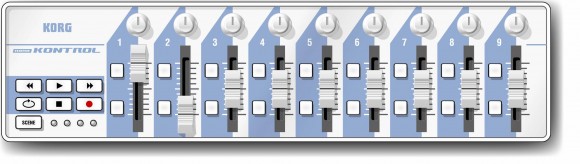

Korg Nano Kontrol, small MIDI control unit

With Ableton Live, extended with Max for Live, I have an “extra mixer”. At the moment I control it with a small Korg Nano Kontrol. This was chosen mostly because of its size, in order to maintain a good working position with my setup, and also because it seemed to have the functions I needed at the time. (I am considering replacing it with a controller with more functions, and I will return to this later on.) With this setup, I can use several MaxMSP patches as plug-ins:

– The MaxMSP Granular/Filter Patch

– The Live Convolve Patch

– The Hadron Particle Synthesizer

These will be presented below.

MaxMSP Granular/Filter Patch

MaxMSP Granular/Filter Patch as plug-in in Ableton Live

The Granular/Filter patch, hereafter referred to as the G/F patch, was designed for me by Ståle Storløkken. It combines granular synthesis[2] and frequency filtering. To explain granular synthesis in simple words: the sound input is divided into small grains and played back with variables such as grain length, density, deviation (random variation in grain length) and pitch. All of these variables can be controlled to a certain degree. Furthermore, in this patch the sound can be filtered through a graphic EQ filter where I can single out very narrow frequency areas, or use a random function to make the EQ filtering change randomly.
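The principle described above can be illustrated with a minimal Python sketch. This is not the G/F patch itself, which is built in MaxMSP and whose internals are not described here. The function name `granulate`, its parameter names, the default values and the Hann window are all assumptions made for illustration, and a sine tone stands in for the voice.

```python
import math
import random


def granulate(source, sample_rate, duration_s, grain_ms=40.0, density=50.0,
              deviation=0.5, pitch=1.0, seed=0):
    """Scatter short windowed grains of `source` over an output buffer.

    Hypothetical parameters, loosely mirroring the variables named in the text:
      grain_ms  - nominal grain length in milliseconds
      density   - number of grains per second of output
      deviation - random variation in grain length (0.0 = none, 1.0 = +/-100 %)
      pitch     - playback speed of each grain (1.0 = original pitch)
    """
    rng = random.Random(seed)  # seeded so the "randomness" is repeatable
    out = [0.0] * int(duration_s * sample_rate)
    n_grains = int(density * duration_s)

    for _ in range(n_grains):
        # Deviation: vary the grain length randomly around the nominal value.
        factor = 1.0 + deviation * rng.uniform(-1.0, 1.0)
        length = max(2, int(sample_rate * grain_ms / 1000.0 * factor))

        src_start = rng.randrange(len(source))  # where the grain is taken from
        out_start = rng.randrange(len(out))     # where the grain is placed

        for i in range(length):
            j = out_start + i
            if j >= len(out):
                break
            # Pitch: read the source faster or slower than it was recorded.
            src_pos = int(src_start + i * pitch) % len(source)
            # A Hann window fades each grain in and out to avoid clicks.
            window = 0.5 - 0.5 * math.cos(2.0 * math.pi * i / (length - 1))
            out[j] += source[src_pos] * window
    return out


sr = 8000
# One second of a 220 Hz sine tone, used here as a stand-in for a vocal recording.
voice = [math.sin(2.0 * math.pi * 220.0 * n / sr) for n in range(sr)]
cloud = granulate(voice, sr, duration_s=1.0)
```

Changing `seed` gives a different cloud of grains from the same input, which is one simple way to picture the role randomness plays in the processing.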

Example II, 4:

Usability

Remote Zero SL MIDI controller

Ståle Storløkken also helped me to map the different functions in the patch to the Remote Zero SL MIDI controller. To work with this patch in real time, I wanted the controller to “connect” with the visual picture of the patch. As an example, the upper left knobs on the controller are assigned to the variables listed above (pitch, density, etc.). Furthermore, the two faders to the right are assigned to frequency and gain in the filter frequency control, and the two faders to the left control the original loop and the processed loop.

The Live Convolve patch

The Live Convolve Patch (hereafter referred to as Convolution) was made by my colleagues Trond Engum[3] and Øyvind Brandtsegg. It is based on the principle of convolution reverb.[4] In short: I sample a sound or a phrase, which is then used in the same way as an impulse response (see footnote). When I activate the convolution function, the next sound I send will be convolved with the sample, and the resulting sound will be a mix of the two. I often use it to blend one vocal phrase with another.
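The underlying operation can be shown in a few lines of Python. This is a sketch of plain time-domain convolution under simplifying assumptions, not the Live Convolve patch itself: the names `convolve`, `sampled_phrase` and `next_sound` are hypothetical, the "sounds" are only three samples long, and real-time implementations typically rely on faster FFT-based methods.

```python
def convolve(signal, impulse):
    """Direct (time-domain) convolution of two lists of samples.

    Every sample of `signal` triggers a scaled copy of `impulse`,
    and all the copies are summed into the output.
    """
    out = [0.0] * (len(signal) + len(impulse) - 1)
    for n, s in enumerate(signal):
        if s == 0.0:
            continue  # a silent sample contributes nothing
        for k, h in enumerate(impulse):
            out[n + k] += s * h
    return out


# Hypothetical toy data: the sampled phrase plays the role of the impulse response.
sampled_phrase = [1.0, 0.5, 0.25]
next_sound = [1.0, 0.0, -1.0]

blended = convolve(next_sound, sampled_phrase)
print(blended)  # [1.0, 0.5, -0.75, -0.5, -0.25]
```

The result is longer than either input and carries traces of both, which is the sense in which the two sounds are blended.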

Example II, 5:

Usability

This is a beta version that requires some adjustments in order to be fully functional. It is fairly easy to operate, and I am looking forward to a version that is even more suitable for a live setting. For now I have used it mostly in studio sessions and as part of live sequences with low volume.

Hadron Particle Synthesizer

The Hadron Particle Synthesizer, hereafter referred to as the Hadron, is an open source plug-in, created by Professor Øyvind Brandtsegg and several colleagues at the Music Technology Section, Department of Music, NTNU. The Hadron is based on granular synthesis, and designed to make it possible to “morph” seamlessly between different pre-programmed sound processing techniques, called states. At the moment I do not have all the functions of the patch mapped to a controller, but by using only the Korg Nano Kontrol, I can record sound samples and morph the samples between the different “states”. If I want to control the so-called “expression controllers” in the patch, I will need another MIDI controller, and that would be the reason for replacing the small Nano Kontrol.
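One simple way to picture morphing between states is as a gradual blend of parameter values. The Python sketch below assumes that a state is a set of named numerical parameters and that morphing is a straight linear interpolation between two such sets. Both are assumptions made for illustration: the parameter names and values are invented, and the sketch does not claim to reproduce how the Hadron actually works.

```python
def morph(state_a, state_b, position):
    """Blend two parameter sets.

    position = 0.0 gives state_a, 1.0 gives state_b,
    and values in between give a weighted mix of the two.
    """
    position = min(1.0, max(0.0, position))  # keep the position within 0..1
    return {
        name: (1.0 - position) * state_a[name] + position * state_b[name]
        for name in state_a
    }


# Hypothetical states; the Hadron's real states are not described in the text.
state_a = {"grain_ms": 20.0, "density": 100.0, "pitch": 1.0}
state_b = {"grain_ms": 80.0, "density": 20.0, "pitch": 0.5}

halfway = morph(state_a, state_b, 0.5)
print(halfway)  # {'grain_ms': 50.0, 'density': 60.0, 'pitch': 0.75}
```

Moving `position` with a single knob or fader would then change all parameters at once, which is one reason a small controller can be enough for morphing even when the individual parameters are not mapped.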

Example II, 6:

Usability

This patch has a lot of possibilities, and is fascinating for this reason. The morphing between states gives it a very flexible and varied character. It is very complex, and for me it has been interesting, but after a while also frustrating, to explore, because it seems very difficult to control. (I will discuss the need for control and predictability in Section 2.3.) It is possible that I can find a control unit that enables me to work with the Hadron in a (for me) more logical and intuitive way, and this would probably change the situation, at least to some extent. In contrast to the Live Convolve patch, which I could implement in my setup and vocabulary immediately, the Hadron seems to be a “new instrument”. For now, I have implemented in my vocabulary just a small part of all the things that the Hadron can actually do. I will discuss this choice further in the next section, but would point out that I experience the “small part of Hadron” that I use as being sufficiently predictable, and as both possible and valuable to implement in my own musical vocabulary and that of my fellow musicians.

[1] Max is a visual programming language, and in MaxMSP it is used to create sound patches. It was originally written by Miller Puckette as the Patcher editor for the Macintosh at IRCAM in the mid-1980s, to give composers access to an authoring system for interactive computer music (Wikipedia).

[2] Granular synthesis is a basic sound synthesis method that operates on the microsound time scale. It is based on the same principle as sampling. However, the samples are not played back conventionally, but are instead split into small pieces of around 1 to 50 ms. These small pieces are called grains. Multiple grains can be layered on top of each other, and may play at different speeds, phases, volume and pitch. (Wikipedia)

[3] Engum, Trond: Beat the Distance, Music technological strategies for composition and production, NTNU/Norwegian Artistic Research Fellow Programme 2012, (pp. 16-20).

[4] In audio signal processing, convolution reverb is a process for digitally simulating the reverberation of a physical or virtual space. It is based on the mathematical convolution operation, and uses a pre-recorded audio sample of the impulse response of the space being modelled. To apply the reverberation effect, the impulse-response recording is first stored in a digital signal-processing system. This is then convolved with the incoming signal to be processed. The process of convolution multiplies each sample of the audio to be processed (reverberated) with the samples in the impulse response file (Wikipedia).
